Who Should Handle CORS: Your Backend or Your Infrastructure?

Why moving CORS handling to infrastructure helps in production — but hurts locally.

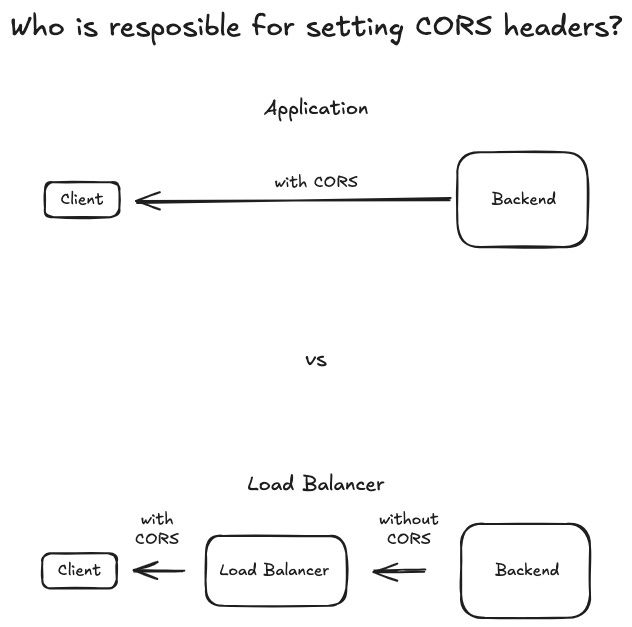

A backend contains business logic and interfaces to the outside world. Oftentimes, the advice is to extract as many non-business-logic responsibilities from the backend application as possible and move them to standalone components in the infrastructure.

CORS headers are an example: responses to cross-origin requests must contain the Access-Control-Allow-Origin header, which tells the client (browser) whether the frontend is allowed to call the backend.

This can be handled in the backend. Most frameworks, Hono among them, provide pre-built middleware for this purpose. Call app.use(cors()) in your main function, and the middleware adds the CORS headers for you.

Another approach is to let the load balancer (e.g., Nginx) set the headers. In this case, the backend application is not even aware of the concept of CORS.

If you want to compare the two approaches, you have to consider two cases:

Deployed system (development and production environment in the cloud)

Local development setup

Let’s start with the deployed system:

When a browser makes a request that does not count as a "simple" request (for example, a POST request with a JSON body) and the backend is not on the same origin as the frontend (same scheme, host, and port), it first sends a preflight OPTIONS request to the backend. The backend must respond with CORS headers such as Access-Control-Allow-Origin, among others. If the response permits the request, the browser then sends the actual POST request.
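The preflight exchange can be sketched without any framework. This is a minimal illustration, not how Hono or Nginx implement it: the `CorsRequest` and `CorsResponse` types, the function names, the allow-list with `http://localhost:5173`, and the specific methods, headers, and max-age value are all assumptions made for the example.

```typescript
// Minimal model of an HTTP exchange; real frameworks supply their own types.
interface CorsRequest {
  method: string;
  headers: Record<string, string | undefined>; // header names in lowercase
}

interface CorsResponse {
  status: number;
  headers: Record<string, string>;
}

// Hypothetical allow-list: the origin a local frontend dev server might use.
const ALLOWED_ORIGINS = new Set<string>(["http://localhost:5173"]);

// Returns a response for preflight requests, or null when the request
// is not a preflight and should continue to the normal route handler.
function handlePreflight(req: CorsRequest): CorsResponse | null {
  if (req.method !== "OPTIONS") return null;
  const origin = req.headers["origin"];
  const requestedMethod = req.headers["access-control-request-method"];
  if (origin === undefined || requestedMethod === undefined) return null;

  if (!ALLOWED_ORIGINS.has(origin)) {
    // No CORS headers in the answer: the browser will not send the actual request.
    return { status: 403, headers: {} };
  }
  return {
    status: 204,
    headers: {
      "Access-Control-Allow-Origin": origin,
      "Access-Control-Allow-Methods": "GET, POST, PUT, DELETE",
      "Access-Control-Allow-Headers": "Content-Type, Authorization",
      // Lets the browser reuse this preflight result for 10 minutes.
      "Access-Control-Max-Age": "600",
      "Vary": "Origin",
    },
  };
}

// CORS headers to attach to the actual (non-preflight) response.
function corsHeadersFor(req: CorsRequest): Record<string, string> {
  const origin = req.headers["origin"];
  if (origin === undefined || !ALLOWED_ORIGINS.has(origin)) return {};
  return { "Access-Control-Allow-Origin": origin, "Vary": "Origin" };
}
```

Whichever component runs this logic, backend middleware or load balancer, the browser sees the same headers. That is why the responsibility can be moved between the two.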

A load balancer can answer these OPTIONS requests itself or cache the responses, so far fewer requests reach the backend.

Additionally, if the infrastructure team is separate from the application team, the correct CORS headers can be enforced company-wide, and the application team doesn’t have to worry about them.

Now let’s look at the local development setup:

If you let the load balancer handle CORS, requests from your frontend to your backend will fail when you run both locally without it. Your browser will complain that there are no CORS headers. Thus, you have to set up a local load balancer like Nginx. In this setup, your frontend calls the load balancer, which then forwards the request to your backend.
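The reason the browser complains locally is that frontend and backend usually run on different ports, and a different port already means a different origin. A small sketch of that check follows. The URLs and port numbers are assumptions chosen for illustration, and `isSameOrigin` is a hypothetical helper built on the standard `URL` class.

```typescript
// Two URLs share an origin only if scheme, host, and port all match.
function isSameOrigin(a: string, b: string): boolean {
  return new URL(a).origin === new URL(b).origin;
}

// Hypothetical local dev addresses: same host, different ports.
const frontendUrl = "http://localhost:5173/app";
const backendUrl = "http://localhost:3000/api/items";

console.log(isSameOrigin(frontendUrl, backendUrl)); // false: the ports differ
console.log(isSameOrigin("http://localhost:3000/a", "http://localhost:3000/b")); // true: only the path differs
```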

This introduces more complexity.

How do you want to run the load balancer? As a system service in your Linux OS? As a Docker container? In a Docker Compose setup?

Who will configure and maintain the load balancer configuration? How do you keep it in sync across all developer machines?

If you use a task orchestrator like Vercel’s Turborepo for Node.js, it is harder to integrate the lifecycle of non-Node.js systems such as Nginx into the task dependency graph.

In production environments, it's generally preferable to handle CORS at the infrastructure level (e.g., via Nginx or an API gateway). This centralizes configuration, allows preflight responses to be cached (if configured), and enforces organization-wide security policies without burdening the application team.

However, in local development, requiring a load balancer adds unnecessary complexity. Developers must manage extra tooling and keep configurations synchronized across environments. Instead, handling CORS within the backend application using middleware (like app.use(cors()) in Hono) simplifies the development setup and improves the developer experience (DX).

Suggested approach:

Use a hybrid strategy:

In development, implement CORS in the backend for simplicity.

In production, shift CORS to infrastructure for scalability, consistency, and better separation of concerns.
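One way to wire up the hybrid strategy is an explicit switch that is only turned on for developer machines. The sketch below assumes a hypothetical environment variable `CORS_IN_APP`. The helper names and the string-based middleware list are placeholders for real middleware registration, not part of any framework.

```typescript
type Env = Record<string, string | undefined>;

// Hypothetical flag name; set CORS_IN_APP=true only on developer machines.
// Deployed environments leave it unset and rely on the infrastructure.
function shouldAppHandleCors(env: Env): boolean {
  return env["CORS_IN_APP"] === "true";
}

// Stand-in for middleware registration: returns the names of the
// middleware that would be installed, in order.
function middlewareStack(env: Env): string[] {
  const stack: string[] = [];
  if (shouldAppHandleCors(env)) stack.push("cors");
  stack.push("routes");
  return stack;
}

console.log(middlewareStack({ CORS_IN_APP: "true" })); // [ 'cors', 'routes' ]
console.log(middlewareStack({})); // [ 'routes' ]
```

In a Hono application, the same condition could guard the app.use(cors()) call. Because the flag defaults to off, a missing variable in a deployed environment never results in the backend and the load balancer both setting CORS headers.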

Do you think the infrastructure or the backend application should handle CORS?